Introduction

One of the main reasons we decided to create ArtGuilds was that it had become increasingly hard, if not impossible, to share your work online without the platform using it to train its own A.I., or without the platform putting its own interests above those of the very users who gave it its value (ArtStation being one example).

For that reason, it was essential for us to build ArtGuilds with our artists' safety as the first priority.

Internal Measures & Tools

To protect the content published on ArtGuilds via Sparks, we have put in place a whole series of measures. They do not guarantee total security (any image on the Internet can ultimately be downloaded and reused, whatever protection is applied), but they help artists feel protected and clearly reduce the potential damage of dealing with the new, A.I.-driven Internet.

1. Keeping it off the streets

First of all, the community is closed to the outside. Although this was a really tough decision for SEO reasons, it keeps guilders' work from being legally scraped by A.I. scraping bots, whose goal is to go through the images, texts and directories of public websites and download whatever content they can to train their A.I.

Today, practically every website whose content is open to the public (the ArtStation main page, for example) can be scraped by A.I. companies' bots under the justification of "legitimate interests".
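Conceptually, a closed community works like a login wall: a request that does not carry a valid member session gets no artwork back, whether it comes from a curious visitor or a scraper bot. The sketch below shows the idea as Python WSGI middleware. It is a minimal illustration under stated assumptions, not ArtGuilds' actual code: the cookie name `session`, the in-memory `VALID_SESSIONS` set and the `gallery` page are all hypothetical stand-ins.

```python
from http.cookies import SimpleCookie
from typing import Callable, Iterable

# Hypothetical in-memory session store; a real site would use a database or cache.
VALID_SESSIONS = {"demo-session-token"}


def login_wall(app: Callable) -> Callable:
    """Wrap a WSGI app so that only requests with a known session cookie reach it."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        cookies = SimpleCookie(environ.get("HTTP_COOKIE", ""))
        session = cookies.get("session")  # hypothetical cookie name
        if session is not None and session.value in VALID_SESSIONS:
            return app(environ, start_response)
        # Anonymous visitors, scraper bots included, receive no artwork at all.
        start_response("401 Unauthorized", [("Content-Type", "text/plain")])
        return [b"Log in to view this community."]

    return wrapped


def gallery(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Stand-in for the real page that lists members' artworks."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"artwork gallery"]


app = login_wall(gallery)
```

For a local try-out, `app` can be served with the standard library's `wsgiref.simple_server.make_server("", 8000, app)`. The same wall that stops scrapers also stops search-engine crawlers, which is exactly the SEO cost mentioned above.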

2. Blocking out notorious A.I. Crawler Bots

Secondly, we have been using special directives to block all the notorious A.I. crawler bots from reaching our website directly on our servers. Crawler bots are programs that navigate the Internet to categorize and download data for some hidden or explicit purpose; one of the best known is Google's crawler, which indexes pages for Google Search. Since A.I. crawlers often change their names (a legal way to get around users' blocks), we have to keep monitoring for them every month.
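One common form of such directives is a `robots.txt` file that disallows specific crawler names. The Python sketch below builds one and then checks it with the standard library's `urllib.robotparser`, the same way a compliant crawler would. The tokens in `AI_CRAWLERS` (`GPTBot`, `CCBot`, `Google-Extended`, `ClaudeBot`) are examples published by some A.I. crawler operators; the list is an illustrative assumption, not ArtGuilds' actual blocklist, and `build_robots_txt` is a hypothetical helper. Keep in mind that `robots.txt` only works for crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Example user-agent tokens published by some A.I. crawler operators.
# Illustrative only: not ArtGuilds' actual blocklist, and names change over time.
AI_CRAWLERS = ["GPTBot", "CCBot", "Google-Extended", "ClaudeBot"]


def build_robots_txt(blocked_agents: list[str]) -> str:
    """Return robots.txt text that disallows every path for each listed agent."""
    lines: list[str] = []
    for agent in blocked_agents:
        lines += [f"User-agent: {agent}", "Disallow: /", ""]
    # All other crawlers keep the default rule.
    lines += ["User-agent: *", "Allow: /"]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(AI_CRAWLERS)

# Check the result the same way a compliant crawler would.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
for agent in ["GPTBot", "CCBot", "Googlebot"]:
    print(agent, parser.can_fetch(agent, "https://example.com/sparks/1"))
```

This prints `False` for `GPTBot` and `CCBot` and `True` for `Googlebot`. Because operators rename their crawlers, a list like `AI_CRAWLERS` has to be reviewed regularly, which matches the monthly monitoring described above.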

3. Firewall for all needs, including "bad bots"

We also run an antivirus that blocks "bad bots". These are bots that ignore file directives such as robots.txt and look for sensitive data regardless of the site owner's wishes (they existed long before A.I. was invented). Their main purpose may not be scraping for generative A.I., but what they collect can still be used for that purpose (indirect scraping).
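Because bad bots ignore file directives, they have to be stopped on the server side based on how they behave. One widely used behavioral signal is request rate: a client fetching dozens of pages per second is unlikely to be a human browsing a gallery. The sketch below is a sliding-window rate limiter in Python. It illustrates that general technique only and is not a description of the antivirus ArtGuilds uses; the class name and the thresholds are hypothetical, and the IP addresses come from the ranges reserved for documentation.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Refuse clients that send more than `max_requests` requests in `window_s` seconds.

    Illustrative heuristic only: the thresholds are assumptions, and commercial
    bot protection combines many more signals than request rate.
    """

    def __init__(self, max_requests: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock
        self.hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        hits = self.hits[client_id]
        # Forget requests that have slid out of the time window.
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # request rate looks automated; refuse it
        hits.append(now)
        return True


# Demo with a controllable clock instead of real time.
fake_now = [0.0]
limiter = SlidingWindowLimiter(max_requests=3, window_s=10.0, clock=lambda: fake_now[0])
burst = [limiter.allow("203.0.113.7") for _ in range(5)]
print(burst)  # [True, True, True, False, False]
fake_now[0] = 11.0
print(limiter.allow("203.0.113.7"))  # True: the earlier requests have expired
```

Refused requests are not recorded, so a bot that keeps hammering the server stays blocked only until its earlier accepted requests age out of the window; stricter setups also penalize refused attempts.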

4. External Protection

We have just added another, firewall-level layer of protection: an industry-leading third-party system that blocks crawlers identifying themselves as A.I. crawlers, as well as bots that may hide under other categories (research crawlers, feed fetchers and so on).

External Tools

In addition to these measures, there are two new tools that let you protect your content directly. They were developed by researchers at the University of Chicago led by Professor Ben Y. Zhao who, concerned about the countless harms these new models would cause artists, used their knowledge and research in artificial intelligence to build free tools for artists.

Glaze

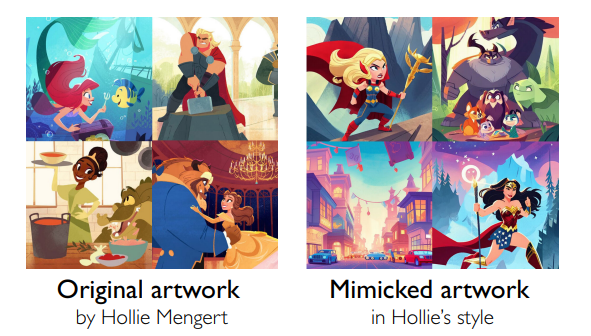

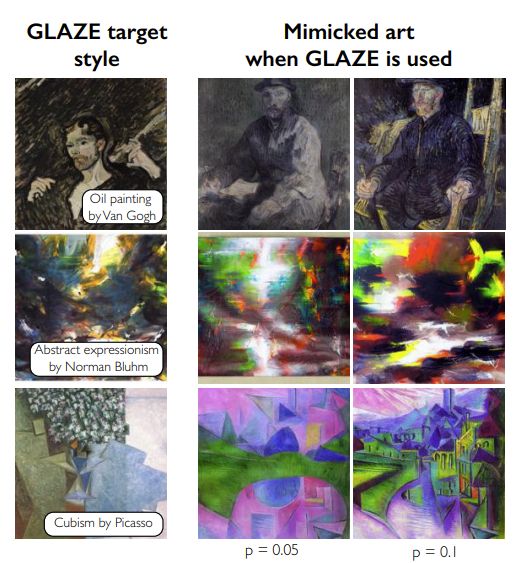

The first tool, Glaze, protects your work against imitation of your personal style.

In short, when an A.I. model ingests an image that has been treated with Glaze, the machine perceives it differently from how it really looks: it associates the image with a different reference style.

The image will still be ingested and used in A.I. datasets, but the model's output will not reproduce your style when someone specifically prompts "painted in the style of *your name*". Applied to all of your artworks, it should therefore make style mimicry impossible.

Download Glaze here (Windows + OSX): https://glaze.cs.uchicago.edu/downloads.html

Nightshade

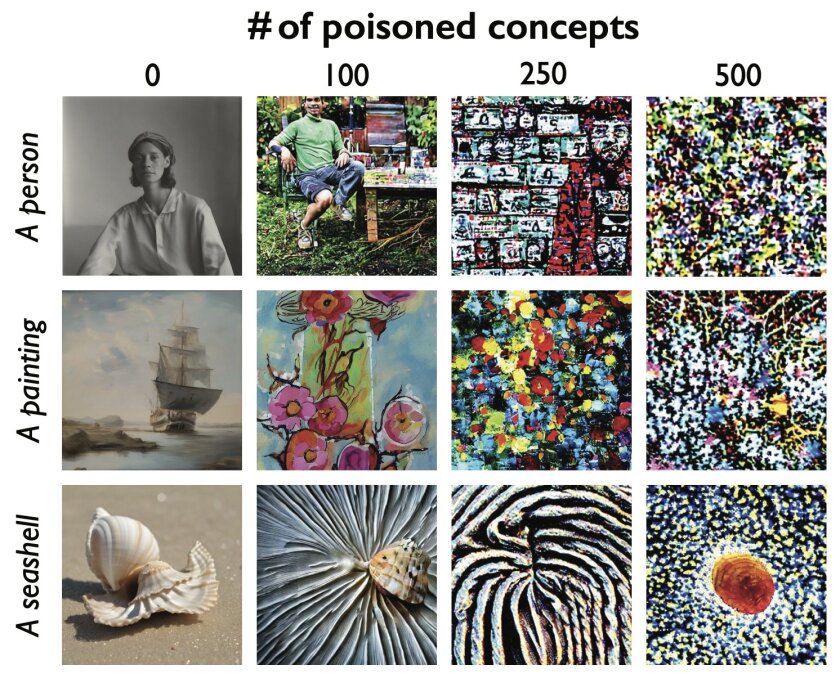

The second and newer tool, Nightshade, lets you "poison" your images with a visual poison: once an A.I. model ingests a poisoned image, the poison damages the model itself. A sufficiently damaged model can no longer create content properly; in short, it regresses.

The amount of poison needed depends on the size of the model: the larger the model, the more poison (that is, the more poisoned images) it takes to actually damage it. This is why a single image on its own still contributes, but its effect is not visible.

Again, these tools do not prevent images from being ingested; rather, they prevent theft of your style and help defeat A.I. models from within.

As with non-removable watermarks, these systems could in turn be countered by new A.I. models trained specifically to nullify their effects (at the time of writing there are none). In the meantime, the team behind Glaze and Nightshade can keep updating both tools to counter these threats.

Download Nightshade here (Windows & OSX): https://nightshade.cs.uchicago.edu/downloads.html

Direct Integration into ArtGuilds

We are actively considering integrating these tools directly into the upload process for your artworks on ArtGuilds. However, the computing resources this would require (given the potential volume of content on an artist platform) could make the project economically unsustainable, at least at this early stage, when we have no income, grants or donations and are bootstrapping with our own money.

That said, our future vision for ArtGuilds already includes finding a sustainable way to provide these protections internally as well.